From Monolith to Event-Driven Architecture

Background

Our backend was originally built as a monolithic REST API running on Amazon ECS. It handled every transaction request directly, performing validation, database operations, and calls to other services synchronously within the same request.

As traffic increased, we started receiving thousands of transaction requests per minute. Even with an Application Load Balancer distributing requests across multiple ECS tasks, the system struggled to handle spikes in demand.

We noticed several problems:

- Slow response times during high traffic

- Database connection saturation from too many concurrent writes

- Frequent timeouts and failed requests

- Difficulty scaling because all parts of the system were tightly connected

We needed to make the architecture more scalable, more efficient, and less dependent on a single process.

The Challenge

In the old design, each API request handled the entire transaction lifecycle:

- Receive and validate the request

- Write data to the database

- Communicate with external services

- Send a response back to the client

This approach worked fine at small scale but became a bottleneck under heavy load. Each request was blocking until all steps were completed. When thousands of users sent requests at once, the ECS tasks hit their CPU and memory limits, and the database became overloaded with open connections.

The Solution

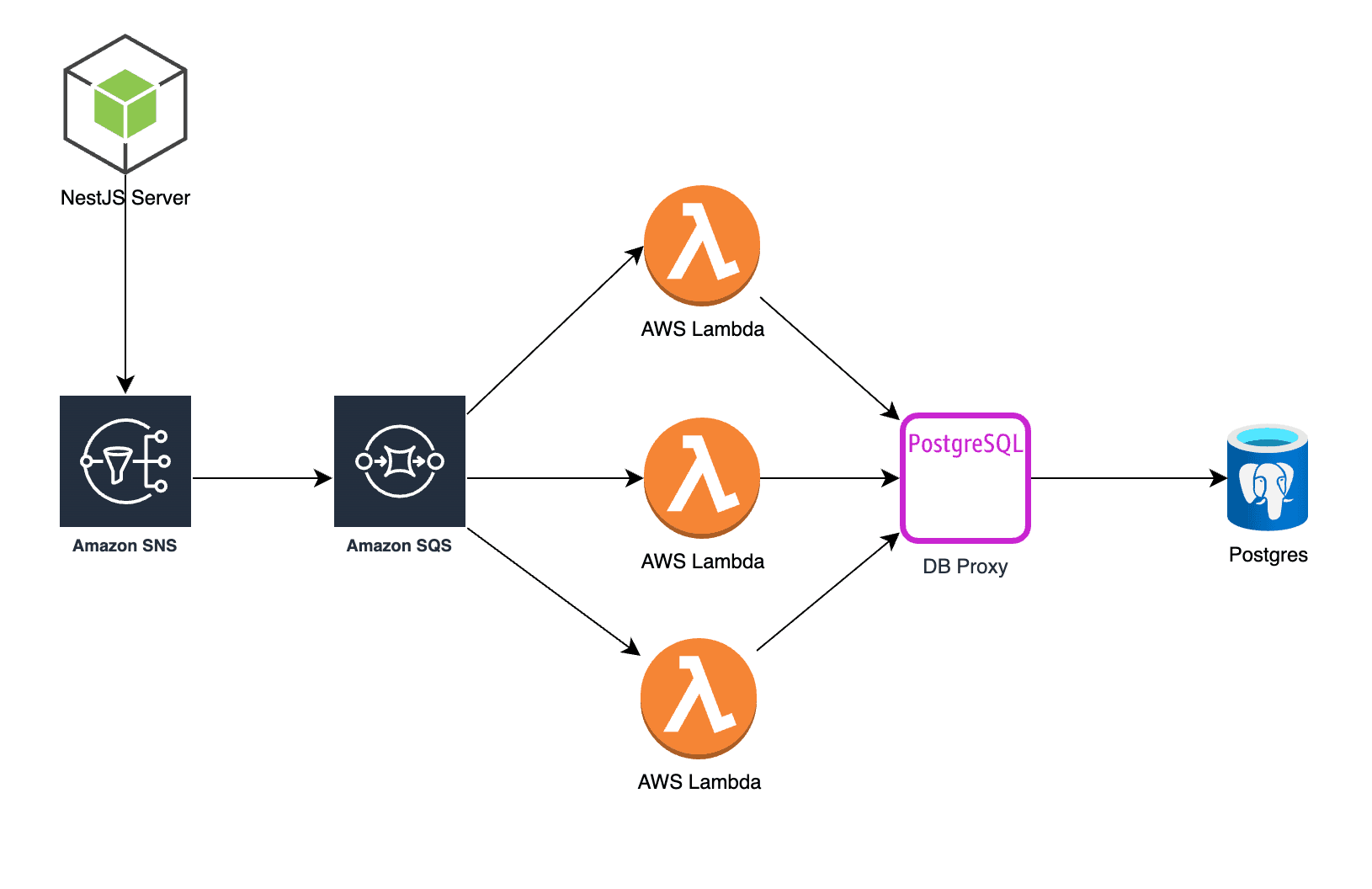

We redesigned the system into an event-driven architecture using Amazon SNS, SQS, Lambda, and a database proxy.

1. SNS for Event Publishing

The ECS REST API now performs only lightweight validation and immediately publishes a transaction event to an Amazon SNS topic. Instead of running all the logic within the request, the API hands the transaction off for background processing. This keeps the API fast and resilient under heavy load.
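The validate-then-publish step described above can be sketched as follows. This is a minimal illustration, not our production handler: the field names (`account_id`, `amount`, `currency`), the `event_type` attribute, and the function names are hypothetical. The `sns_client` argument is assumed to be a boto3 SNS client (`boto3.client("sns")`), passed in so the function is easy to test.

```python
import json
import uuid


def validate_transaction(payload: dict) -> None:
    """Cheap structural checks only; the heavy work happens downstream."""
    for field in ("account_id", "amount", "currency"):  # hypothetical fields
        if field not in payload:
            raise ValueError(f"missing field: {field}")
    if not isinstance(payload["amount"], (int, float)) or payload["amount"] <= 0:
        raise ValueError("amount must be a positive number")


def publish_transaction(sns_client, topic_arn: str, payload: dict) -> dict:
    """Validate, publish one event to SNS, and return an acknowledgment."""
    validate_transaction(payload)
    transaction_id = str(uuid.uuid4())
    message = {"transaction_id": transaction_id, **payload}
    sns_client.publish(
        TopicArn=topic_arn,
        Message=json.dumps(message),
        MessageAttributes={
            # Hypothetical attribute; useful for SNS subscription filters.
            "event_type": {"DataType": "String", "StringValue": "transaction.created"}
        },
    )
    # The client learns the transaction was accepted, not that it was processed.
    return {"transaction_id": transaction_id, "status": "accepted"}
```

Returning the generated `transaction_id` gives the client something to correlate with later, since the real result is produced asynchronously.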

2. SQS for Decoupling

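Each microservice that needs to process transactions subscribes to the SNS topic through its own Amazon SQS queue. The queue buffers messages durably until a consumer deletes them, which lets each service scale and fail independently. A message that is not deleted before its visibility timeout expires becomes visible again and is retried, and a redrive policy on the queue moves messages that keep failing to a dead-letter queue.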
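As a rough sketch of the wiring, the helpers below build the queue attributes for a redrive policy and subscribe a queue to the topic. The retry count, visibility timeout, and function names are illustrative assumptions rather than our actual settings. `sns_client` is assumed to be a boto3 SNS client, and the attributes dictionary is meant to be passed to `create_queue` on a boto3 SQS client.

```python
import json


def build_queue_attributes(dlq_arn: str, max_receive_count: int = 5,
                           visibility_timeout_s: int = 60) -> dict:
    """Attributes for sqs.create_queue; SQS expects every value as a string."""
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    return {
        "VisibilityTimeout": str(visibility_timeout_s),
        # After max_receive_count failed receives, SQS moves the message
        # to the dead-letter queue identified by dlq_arn.
        "RedrivePolicy": json.dumps(
            {"deadLetterTargetArn": dlq_arn, "maxReceiveCount": max_receive_count}
        ),
    }


def subscribe_queue(sns_client, topic_arn: str, queue_arn: str) -> str:
    """Subscribe an SQS queue to the topic and return the subscription ARN."""
    response = sns_client.subscribe(
        TopicArn=topic_arn,
        Protocol="sqs",
        Endpoint=queue_arn,
        # Deliver the published JSON as-is instead of wrapping it in an SNS envelope.
        Attributes={"RawMessageDelivery": "true"},
        ReturnSubscriptionArn=True,
    )
    return response["SubscriptionArn"]
```

The queue also needs an access policy that allows the SNS topic to call `sqs:SendMessage` on it; that policy is omitted here for brevity.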

3. Lambda for Asynchronous Processing

Messages from SQS trigger AWS Lambda functions that handle the actual transaction processing. Each Lambda validates the payload, interacts with the database or other services, and logs results. Because Lambda invocations run independently of the API, they scale out automatically (within the configured concurrency limits) to process thousands of transactions in parallel without affecting API performance.
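A consumer along these lines might look like the sketch below. `process_transaction` is a hypothetical placeholder for the real business logic, and the handler assumes the event source mapping has `ReportBatchItemFailures` enabled, so that only the failed messages in a batch are returned to the queue for retry.

```python
import json


def process_transaction(transaction: dict) -> None:
    """Hypothetical placeholder for the real business logic."""
    if "transaction_id" not in transaction:
        raise ValueError("transaction_id is required")


def extract_transaction(record: dict) -> dict:
    """Parse one SQS record, unwrapping the SNS envelope if present."""
    body = json.loads(record["body"])
    # Without raw message delivery, SNS wraps the payload in a notification
    # envelope and the published JSON sits in its "Message" field.
    if isinstance(body, dict) and body.get("Type") == "Notification" and "Message" in body:
        return json.loads(body["Message"])
    return body


def handler(event, context):
    """Lambda entry point for an SQS-triggered function."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_transaction(extract_transaction(record))
        except Exception as exc:
            # Log and report the failure; SQS will redeliver this message only.
            print(f"failed {record['messageId']}: {exc}")
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Because a message can be delivered more than once, the processing step should be idempotent, for example by keying writes on `transaction_id`.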

4. Database Proxy for Connection Management

We introduced a database proxy layer using Amazon RDS Proxy between the Lambdas and the database. This proxy pools and reuses connections to prevent connection exhaustion when many Lambdas run at the same time. It keeps connections warm and improves both reliability and performance during traffic spikes.
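On the Lambda side, the usual companion to a proxy is to open the connection once per execution environment and reuse it across warm invocations. The sketch below shows that pattern under a few assumptions: `connect` is a zero-argument factory supplied by the caller (for example `lambda: pymysql.connect(host=os.environ["DB_PROXY_ENDPOINT"], ...)` if the database were MySQL), and the helper names and the environment variable are hypothetical.

```python
_connection = None  # module-level, so it survives warm invocations


def get_connection(connect):
    """Return the cached connection, opening one on first use."""
    global _connection
    if _connection is None:
        # `connect` should point at the RDS Proxy endpoint, not the database.
        _connection = connect()
    return _connection


def discard_connection():
    """Drop the cached connection so the next call opens a fresh one."""
    global _connection
    if _connection is not None:
        try:
            _connection.close()
        except Exception:
            pass  # the connection may already be broken
        _connection = None


def run_with_connection(connect, work):
    """Run `work(conn)` on the cached connection; reset it if the work fails."""
    conn = get_connection(connect)
    try:
        return work(conn)
    except Exception:
        discard_connection()
        raise  # let the caller (and SQS) handle the retry
```

Each execution environment then holds at most one client connection to the proxy, and the proxy multiplexes those onto a smaller pool of database connections.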

Results

The transformation to an event-driven architecture delivered dramatic improvements across the entire system. Here's what changed:

Non-Blocking API Requests

The REST API no longer waits for transaction processing to complete before responding to clients. Instead, it returns an acknowledgment as soon as the event has been published to SNS; the response confirms that the transaction was accepted, not that it has been processed. Because each request now occupies an ECS task only for validation and a single publish call, the API can serve thousands of concurrent requests without stalling. Average response times dropped from 2.5 seconds to 120-150 milliseconds.
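To see why lower latency translates into API capacity, Little's law is a handy back-of-the-envelope tool: the average number of requests in flight equals the arrival rate multiplied by the average latency. The calculation below uses the response times quoted above and an assumed arrival rate of 3,000 requests per minute, which is an illustrative figure and not a measurement from our system.

```python
def in_flight_requests(arrival_rate_per_s: float, latency_s: float) -> float:
    """Little's law: average concurrency = arrival rate x average latency."""
    return arrival_rate_per_s * latency_s


# Assumed load for illustration only: 3,000 requests per minute.
rate = 3000 / 60  # 50 requests per second

before = in_flight_requests(rate, 2.5)        # synchronous monolith
after_low = in_flight_requests(rate, 0.120)   # event-driven, best case
after_high = in_flight_requests(rate, 0.150)  # event-driven, worst case

print(f"before: about {before:.0f} requests in flight")
print(f"after:  about {after_low:.1f} to {after_high:.1f} requests in flight")
```

At that assumed rate, the API tier goes from holding roughly 125 requests open at any moment to fewer than 10, which is why the same ECS tasks have far more headroom during spikes.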

Asynchronous Transaction Processing

Lambda functions now consume transactions from SQS asynchronously and in parallel. Lambda polls the queue and delivers messages to function instances in batches, and each invocation runs independently of the others, so the system scales horizontally to process hundreds or thousands of transactions at once. As the backlog grows, Lambda adds instances up to the configured concurrency limit, so the queue absorbs bursts instead of becoming a bottleneck.
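How aggressively Lambda drains the queue is controlled by the event source mapping. The helper below builds the arguments for `create_event_source_mapping` on a boto3 Lambda client; the batch size and concurrency cap are illustrative defaults, not our production values, and the function name is hypothetical.

```python
def build_event_source_mapping(queue_arn: str, function_name: str,
                               batch_size: int = 10,
                               max_concurrency: int = 50) -> dict:
    """Keyword arguments for lambda_client.create_event_source_mapping."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # For SQS event sources, MaximumConcurrency must be between 2 and 1000.
    if not 2 <= max_concurrency <= 1000:
        raise ValueError("max_concurrency must be between 2 and 1000")
    return {
        "EventSourceArn": queue_arn,
        "FunctionName": function_name,
        "BatchSize": batch_size,
        # Lets the function report individual failed messages in a batch.
        "FunctionResponseTypes": ["ReportBatchItemFailures"],
        # Caps concurrent invocations from this queue to protect the database.
        "ScalingConfig": {"MaximumConcurrency": max_concurrency},
    }
```

A call such as `lambda_client.create_event_source_mapping(**build_event_source_mapping(queue_arn, "process-transaction"))` would then attach the queue to the function. Capping concurrency is a deliberate trade-off: the backlog may take longer to drain during a spike, but downstream systems see a bounded load.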

No Database Connection Saturation

Database connection saturation is gone thanks to RDS Proxy's connection pooling. The proxy manages a pool of persistent database connections that are shared across Lambda invocations, which prevents connection exhaustion. Pooling does not remove row or table locks by itself, but with far fewer connections competing for the database at once, the contention that plagued the monolithic system has not resurfaced. Database operations are smooth and consistent, even during peak traffic.

Automatic Scaling

Both ECS and Lambda now scale automatically based on demand. The ECS tasks running the REST API scale up when request volume increases, while Lambda handles transaction processing with built-in auto-scaling. There's no manual intervention required, and the system gracefully handles traffic spikes without degradation. Failed requests dropped from around 8% under load to less than 0.5%.

These improvements transformed the system from a struggling monolith into a resilient architecture that absorbs traffic spikes without the timeouts and connection saturation we saw before.

Conclusion

By adopting SNS, SQS, Lambda, and a database proxy, we transformed a tightly coupled monolith into a scalable, event-driven architecture. The system now handles thousands of concurrent requests smoothly while keeping response times low and reliability high.

This redesign not only improved user experience but also gave us a flexible and maintainable foundation for future growth.